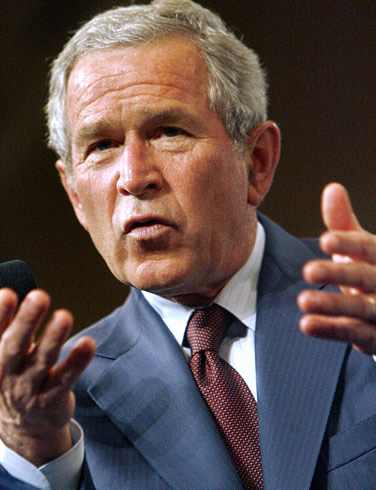

On January 8, 2002, George W. Bush signed into law an education reform bill known as the No Child Left Behind Act of 2001. The law supported standards-based educational reform intended to ensure that all students attained at least some mastery of their subjects before moving on to the next grade. Gone were the days of simply passing students along regardless of their capabilities. I propose applying the same approach to data migration: product data should meet adequate standards before it is moved to a new system. Many companies today take "no data left behind" literally. However, blindly moving information from one system to another creates challenges in the migration itself and hinders the effectiveness of the new product lifecycle management (PLM) system. This article discusses data quality and its impact on data migration and PLM efficiency.

We received a lot of great feedback on my article "What's the big deal about data migration?", so I thought we would take a deeper dive into one of the points I made there. The section titled "Garbage In, Garbage Out" drew many specific comments, mainly around the idea that most companies only use recent data and should be very selective about what they move into a new system. Typically, when we engage with clients on data migration projects, they are reluctant to leave legacy data behind in their old systems but rarely have an in-depth understanding of what that old information consists of. We often find it poorly organized, since much of it was created before PDM and PLM tools became widespread. We also find very little metadata associated with it, because no standards were in place when the information was created. Worse, we may encounter overlapping information, or different terminologies used for the same attributes. Simple mapping scripts can sometimes address this, but first you have to understand the extent of the problem, which can be very time-consuming. The point is that conditioning this data for migration takes a lot of effort, and the payoff is usually minimal, since most companies rarely access information more than three years old. I realize this depends on the type of products a company develops and how long it has been in operation, but the rule generally holds true. So the question becomes: how do we minimize or automate the cleansing and conditioning of older data to reduce its impact on the overall migration effort?
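The "simple mapping scripts" mentioned above might look something like the following minimal Python sketch. It assumes legacy records arrive as dictionaries of attribute name to value; the synonym table `ATTRIBUTE_SYNONYMS`, the helpers `normalize_attributes` and `is_recent`, and the three-year `RECENCY_WINDOW` are hypothetical illustrations, not part of any PLM product's API. Conflicting values for the same target attribute raise an error rather than being silently overwritten, because that overlap is exactly what someone who knows the data has to resolve.

```python
from datetime import date, timedelta

# Hypothetical synonym map: legacy attribute names (lower-cased) mapped to
# the single name the target PLM system is assumed to expect.
ATTRIBUTE_SYNONYMS = {
    "part_no": "part_number",
    "pn": "part_number",
    "part #": "part_number",
    "desc": "description",
    "descr": "description",
    "rev": "revision",
    "revision_level": "revision",
}

# Assumed cutoff based on the "rarely older than three years" rule of thumb
# (approximate: ignores leap days).
RECENCY_WINDOW = timedelta(days=3 * 365)


def normalize_attributes(record):
    """Rename legacy attribute keys to their target names.

    Raises ValueError when two legacy keys map to the same target key with
    different values, since that overlap needs a human decision.
    """
    normalized = {}
    for key, value in record.items():
        name = key.strip().lower()
        target = ATTRIBUTE_SYNONYMS.get(name, name)
        if target in normalized and normalized[target] != value:
            raise ValueError(f"conflicting values for {target!r}: "
                             f"{normalized[target]!r} vs {value!r}")
        normalized[target] = value
    return normalized


def is_recent(last_accessed, today):
    """True if the record was last accessed within the recency window."""
    return today - last_accessed <= RECENCY_WINDOW


if __name__ == "__main__":
    legacy = {"PN": "100-234", "Descr": "Bracket, steel", "Rev": "C"}
    print(normalize_attributes(legacy))
    # {'part_number': '100-234', 'description': 'Bracket, steel', 'revision': 'C'}
    print(is_recent(date(2009, 6, 1), today=date(2010, 6, 1)))  # True
```

Records that fail `is_recent` are candidates to leave behind rather than condition, which keeps the mapping effort focused on data people actually use.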

In the discussion thread on the Agile PLM Experts group on LinkedIn, Atul Mathur suggests moving product record data by product family, with particular scrutiny of how much demand there is for the data. Breaking the data into product groups makes it more manageable to review the condition and necessity of the information. Unfortunately, consulting companies are not particularly useful when it comes to cleansing or evaluating legacy data. We can check for attributes and run scripts that look for duplicate file names, but most of the evaluation is somewhat subjective. It is best for someone familiar with the information to assess its readiness to be moved. We can automate this to some degree, and even develop test plans to ensure it is done systematically, but it requires a time investment on the client's part. In many of these engagements the company decides the task is too formidable and that it would be best if we just hauled everything over en masse. This is a big mistake: the data will most likely be of dubious value, and it will burden the new environment with excess information that can affect performance. In the same thread, Srinivas Ramanujam points out that clients can begin legacy data assessment at any point. He thinks data cleansing should begin before a new system is implemented. Since companies have access to their own information, they are best qualified to evaluate it, and the time a company spends on this activity should significantly reduce the risk to the data migration. I agree with both points and think that companies should take advantage of access to their information to begin assessing its worthiness well before any kind of upgrade or change. I also think that looking for ways to segment the data can make the task more manageable.
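The mechanical part of that assessment (checking for attributes and duplicate file names, segmented by product family) can be sketched in a few lines of Python. This is a minimal illustration, assuming records are dictionaries with hypothetical keys such as `product_family` and `file_name`; `REQUIRED_ATTRIBUTES`, `find_duplicate_file_names`, and `readiness_by_family` are illustrative names only. The script reports gaps so that the people who know the data can make the subjective call; it does not make that call for them.

```python
from collections import defaultdict

# Hypothetical set of attributes the target system is assumed to require.
REQUIRED_ATTRIBUTES = ("part_number", "description", "revision")


def find_duplicate_file_names(records):
    """Map each file name used by more than one record to the parts using it.

    Comparison is case-insensitive (an assumption; adjust for your storage).
    """
    by_name = defaultdict(list)
    for rec in records:
        name = rec.get("file_name")
        if name:
            by_name[name.lower()].append(rec.get("part_number", "?"))
    return {name: parts for name, parts in by_name.items() if len(parts) > 1}


def readiness_by_family(records):
    """Per product family: record count and records missing required attributes."""
    report = defaultdict(lambda: {"count": 0, "missing": []})
    for rec in records:
        # Records with no family are grouped so they still get reviewed.
        entry = report[rec.get("product_family") or "UNASSIGNED"]
        entry["count"] += 1
        missing = [a for a in REQUIRED_ATTRIBUTES if not rec.get(a)]
        if missing:
            entry["missing"].append((rec.get("part_number", "?"), missing))
    return dict(report)


if __name__ == "__main__":
    legacy = [
        {"part_number": "100-1", "description": "Bracket", "revision": "A",
         "product_family": "Pumps", "file_name": "BRKT.dwg"},
        {"part_number": "100-2", "description": "", "revision": "B",
         "product_family": "Pumps", "file_name": "brkt.dwg"},
        {"part_number": "200-7", "description": "Valve body",
         "product_family": "Valves", "file_name": "VB7.dwg"},
    ]
    print(find_duplicate_file_names(legacy))
    # {'brkt.dwg': ['100-1', '100-2']}
    for family, entry in readiness_by_family(legacy).items():
        print(family, entry)
```

Reviewing one family's report at a time keeps each review small enough for a subject-matter expert to work through.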

In the discussion thread on the PLM PDM CAD Network, also on LinkedIn, there were numerous comments about the article, but one theme emerged. Patrick Chin pointed out that legacy data often doesn't adhere to current processes and may not even be reusable. He makes one of my favorite points: if you automate processes around bad information, you just make things worse more efficiently. He also notes that data cleansing tools lack the discernment needed to categorize the information properly, and that new architectures can further exacerbate these issues. Forcing the data into the new architecture without considering its state can lead to long-term confusion and compromise the integrity and viability of the new system. I really liked Jim Hamstra's idea of designing the system and the migration approach on the premise that all legacy data is flawed until proven otherwise. Rather than trying to clean the data before moving it over, he suggests using the system to help you clean it as you go. I think this approach could work with the right amount of up-front design and the right process and data segmentation. The new system will offer better search tools and data mining solutions that can help you identify problem areas. This ties into the approach I advocated in my article "The Cart before the Horse? Using PLM to Design Business Process". You could set up the target system and migrate information over time, cleaning it as you populate the new system. This approach has some limitations, but it is definitely worth considering. Robert Miller also chimed in on this thread to stress the need to consider data migration early in the PLM adoption process, and to evaluate the new system against the legacy system to minimize some of the migration problems you may encounter.
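Jim Hamstra's "flawed until proven otherwise" premise can be sketched as items that land in the target system in a quarantined state and are promoted only once every validation rule passes. In this minimal Python sketch the `QUARANTINED` and `VALIDATED` states, the `MigratedItem` class, and the two rules are hypothetical; in practice you would use the target PLM system's own lifecycle states and workflow rather than a standalone script.

```python
from dataclasses import dataclass, field

# Hypothetical lifecycle states; a real PLM system would use its own
# workflow or status attribute for this.
QUARANTINED = "QUARANTINED"
VALIDATED = "VALIDATED"


@dataclass
class MigratedItem:
    part_number: str
    attributes: dict
    status: str = QUARANTINED  # every legacy item starts out as suspect
    problems: list = field(default_factory=list)


# Each rule returns an error message, or None if the item passes.
def has_description(item):
    return None if item.attributes.get("description") else "missing description"


def has_revision(item):
    return None if item.attributes.get("revision") else "missing revision"


RULES = (has_description, has_revision)


def review(item, rules=RULES):
    """Promote the item only if every rule passes; otherwise record why not."""
    results = (rule(item) for rule in rules)
    item.problems = [msg for msg in results if msg is not None]
    item.status = VALIDATED if not item.problems else QUARANTINED
    return item


if __name__ == "__main__":
    batch = [
        MigratedItem("100-1", {"description": "Bracket", "revision": "A"}),
        MigratedItem("100-2", {"revision": "B"}),
    ]
    for item in map(review, batch):
        print(item.part_number, item.status, item.problems)
    # 100-1 VALIDATED []
    # 100-2 QUARANTINED ['missing description']
```

Because rules are plain functions, new checks can be added as problem areas surface during the migration, which fits the clean-as-you-go approach.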

The takeaways from this discussion are that leaving data behind is not necessarily a bad thing, since bringing poor-quality data along can corrupt and handicap your new system. Time spent up front to fully assess your information is time well spent. Segmenting data and moving it over in portions is a viable strategy for facilitating cleanup and assessment. Using the target PLM system as a filter and cleaning mechanism can be an effective way to manage migration. I want to thank all of the group members on LinkedIn who shared their expertise; hopefully we have all come away with a better understanding of how to deal with legacy information when adopting a new PLM system. If we stay flexible and open-minded, I suspect our new standards for data migration will yield better results than the education reforms currently in place.